Tricking your Super-fancy Neural Net

While cleaning up my reading list earlier today, I came across this really cool paper from CVPR 2015 with the hard to ignore title: Deep Neural Networks are Easily Fooled.

I think anyone who has been working with Deep Learning systems (or even Machine learning algorithms to a large extent) has come across multiple examples where you look at the result and wonder “How on earth did I get that?”. I have fond memories of one of my projects at IIIT-B which involved sketch classification, where my system was 99% confident that my sketch of an aeroplane was in fact a dog, leading one of my teammates to wonder if the dog was, in fact, inside the plane!

The somewhat blackbox nature of Deep Learning systems only adds to the mystery, which is why I feel papers like this help provide some context to “how” we’re solving the problem at hand rather than the “what”.

Such Accurate, Much Wow!

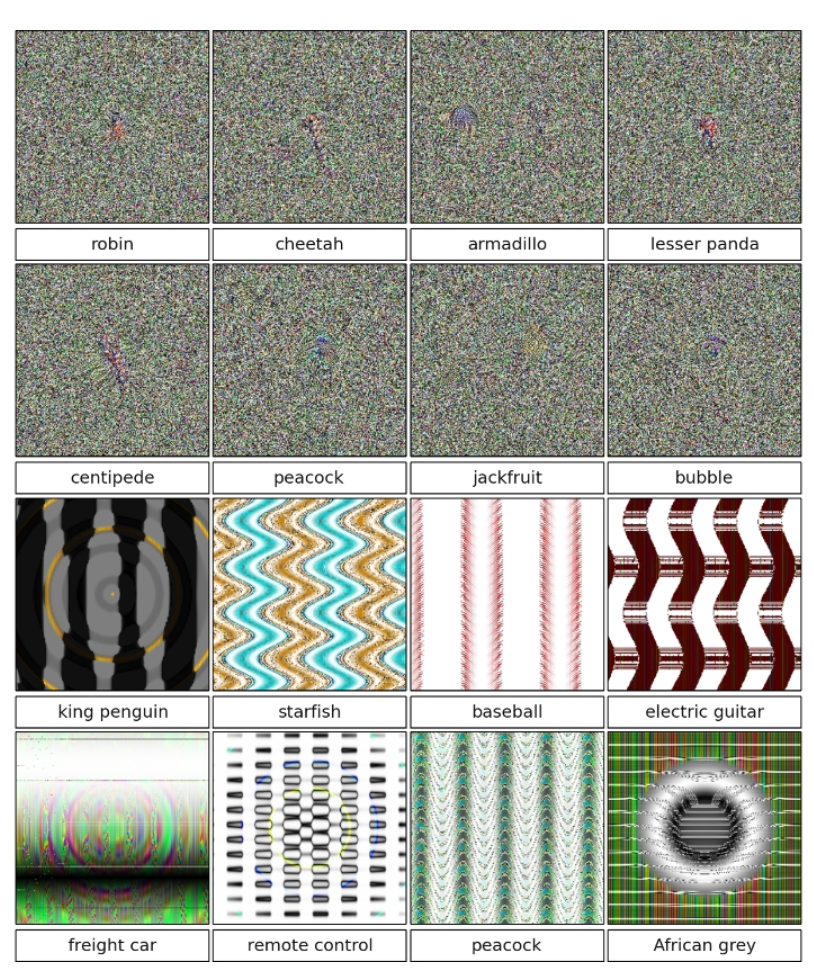

For obvious reasons, the question to ask is how do you come up with these trick images?

The novel images we test DNNs on are produced by evolutionary algorithms (EAs). EAs are optimization algorithms inspired by Darwinian evolution. They contain a population of "organisms" (here, images) that alternately face selection (keeping the best) and then random perturbation (mutation and/or crossover). Which organisms are selected depends on the fitness function, which in these experiments is the highest prediction value a DNN makes for that image belonging to a class [...] Here, fitness is determined by showing the image to the DNN; if the image generates a higher prediction score for any class than has been seen before, the newly generated individual becomes the champion in the archive for that class.

They use different encodings to generate different types of incorrectly labelled images, including a direct encoding and an indirect encoding.

Another method they use is also pretty cool. They use gradient ascent (blog name FTW!) for this:

We calculate the gradient of the posterior probability for a specific class - here, a softmax output unit of the DNN - with respect to the input image using backprop, and then we follow the gradient to increase a chosen unitWe calculate the gradient of the posterior probability for a specific class - here, a softmax output unit of the DNN - with respect to the input image using backprop, and then we follow the gradient to increase a chosen unit’s activation.

This technique was earlier described in this paper.

What’s interesting is also that these examples use one DNN for generation, but generalize across multiple DNNs, which seems to imply the features recognised are all very similar (which is both good and bad imho). Absolutely beautiful discussion in the supplementary material as well, I thoroughly enjoyed reading this paper, and you should too!

Deep Neural Networks are Easily Fooled: High Confidence Predictions for Unrecognizable Images by Anh Nguyen, Jason Yosinski and Jeff Clune