Batch Normalization in Neural Networks

Read a couple of interesting papers today from 2015 about using Batch Normalization in neural networks. Batch Normalization basically means that we normalize each activation individually. The reason for this, quoting the original paper

Training Deep Neural Networks is complicated by the fact that the distribution of each layer's inputs changes during training, as the parameters of the previous layers change. This slows down the training by requiring lower learning rates and careful parameter initialization, and makes it notoriously hard to train models with saturating nonlinearities. We refer to this phenomenon as internal covariate shift.

Their paper is a fascinting deep dive into the math of how layers are affected by the input, and how this covariate shift can be reduced by applying batch normalizations. Using batch normalization means we can use higher learning rates (since gradients do not explode or vanish), making the network more resilient. In fact, in their results they showed how they could use the batch normalized network to achieve the same accuracy as the vanilla network with half the training steps, and with higher learning rates upto 14x fewer steps!

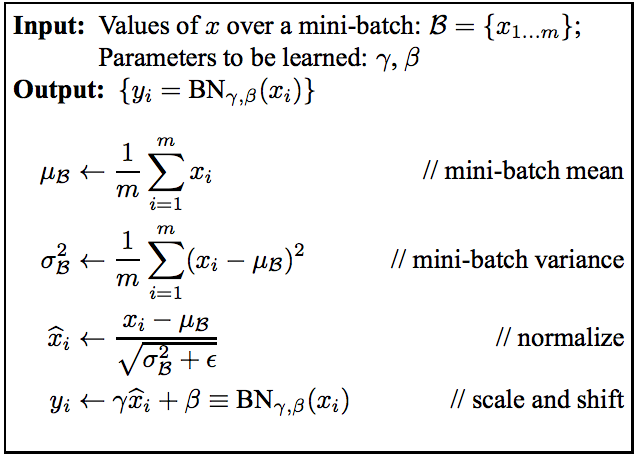

The Batch Normalization Transform

This paper set up a bunch of other interesting papers which explored the same concept of batch normalization, and I’ll talk about two of them below.

The first one notes that the original paper applies only to feed forward neural networks and attempts to explore batch normalization in Recurrent Neural Networks. They apply batch normalization on the input-to-hidden transition and oberved faster training and greater overfitting as well. The fact that the generalization performance didn’t seem to improve with batch normalization was opposite to what was seen for feedforward neural nets.

However, a couple of months ago, another paper visited the same idea albeit in a slightly different manner (in fact one of the authors is on both the papers). Instead of applying the normalization on the input-to-hidden transition, they apply it horizontally between timesteps, using the consideration that RNNs are deepest in the time direction. Lo and behold, they now have the state of the art results (greater generalizability) with lesser convergence time.

Truly an excellent set of papers exploring what seems, at first read, as a minor modification to an algorithm. Go on, read the papers!

Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift Sergey Ioffe, Christian Szegedy

Batch Normalized Recurrent Neural Networks César Laurent, Gabriel Pereyra, Philémon Brakel, Ying Zhang, Yoshua Bengio

Recurrent Batch Normalization Tim Cooijmans, Nicolas Ballas, César Laurent, Çağlar Gülçehre, Aaron Courville